Update: Please see this post if you’re wondering why I edited this piece.

I read a recent story in the Guardian from Bruce Schneier titled “Be Careful When You Come To Put Your Trust In the Clouds” in which he suggests that Cloud Computing is “…nothing new.”

Fundamentally it’s hard to argue with that title as clearly we’ve got issues with security and trust models as they relate to Cloud Computing, but the byline seems to be at odds with Schneier’s ever-grumpy dismissal of Cloud Computing in the first place. We need transparency and trust: got it.

Many of the things Schneier says make perfect sense whilst others just make me scratch my head in abstract. Let’s look at a couple of them:

This year’s overhyped IT concept is cloud computing. Also called software as a service (Saas), cloud computing is when you run software over the internet and access it via a browser. The salesforce.com customer management software is an example of this. So is Google Docs. If you believe the hype, cloud computing is the future.

Clearly there is a lot of hype around Cloud Computing, but I believe it’s important — especially as someone who spends a lot of time educating and evangelizing — that people like myself and Schneier effectively separate the hype from the hope and try and paint a clearer picture of things.

To that point, Schneier does his audience a disservice by dumbing down Cloud Computing to nothing more than outsourcing via SaaS. Throwing the baby out with the rainwater seems a little odd to me and while it’s important to relate to one’s audience, I keep sensing a strange cognitive dissonance whilst reading Schneier’s opining on Cloud.

Firstly, and as I’ve said many times, Cloud Computing is more than just Software as a Service (SaaS). SaaS is clearly the most mature and visible set of offerings in the evolving Cloud Computing taxonomy today, but one could argue that players like Amazon with their Infrastructure as a Service (IaaS) or even the aforementioned Google and Salesforce.com with their Platform as a Service (PaaS) offerings might take umbrage with Schneier’s suggestion that Cloud is simply some “…software over the internet” accessed “…via a browser.”

Overlooking IaaS and PaaS is clearly a huge miss here and it calls into question the point Schneier makes when he says:

But, hype aside, cloud computing is nothing new. It’s the modern version of the timesharing model from the 1960s, which was eventually killed by the rise of the personal computer. It’s what Hotmail and Gmail have been doing all these years, and it’s social networking sites, remote backup companies, and remote email filtering companies such as MessageLabs. Any IT outsourcing – network infrastructure, security monitoring, remote hosting – is a form of cloud computing.

The old timesharing model arose because computers were expensive and hard to maintain. Modern computers and networks are drastically cheaper, but they’re still hard to maintain. As networks have become faster, it is again easier to have someone else do the hard work. Computing has become more of a utility; users are more concerned with results than technical details, so the tech fades into the background.

<sigh> Welcome to the evolution of technology and disruptive innovation. What’s the point?

Fundamentally, as we look beyond speeds and feeds, Cloud Computing — at all layers and offering types — is driving huge headway and innovation in the evolution of automation, autonomics and the applied theories of dealing with massive scale in compute, network and storage realms. Sure, the underlying problems — and even some of the approaches — aren’t new in theory, but they are in practice. The end result may very well be that a consumer of service may not see elements that are new technologically as they are abstracted, but the economic, cultural, business and operational differences are startling.

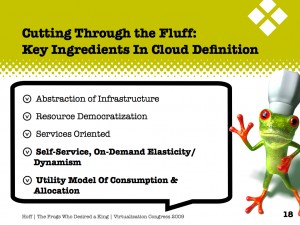

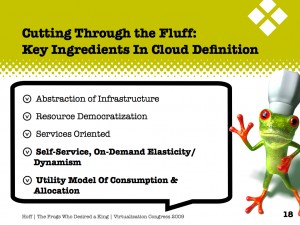

If we look at what makes up Cloud Computing, the five elements I always point to are:

Certainly the first three are present today, and have been for some while, in many different offerings. However, combining the last two (on-demand, self-service scale and dynamism with new economic models of consumption and allocation) is quite different, especially when doing so at extreme levels of scale with multi-tenancy.

So let’s get to the meat of the matter: security and trust.

But what about security? Isn’t it more dangerous to have your email on Hotmail’s servers, your spreadsheets on Google’s, your personal conversations on Facebook’s, and your company’s sales prospects on salesforce.com’s? Well, yes and no.

IT security is about trust. You have to trust your CPU manufacturer, your hardware, operating system and software vendors – and your ISP. Any one of these can undermine your security: crash your systems, corrupt data, allow an attacker to get access to systems. We’ve spent decades dealing with worms and rootkits that target software vulnerabilities. We’ve worried about infected chips. But in the end, we have no choice but to blindly trust the security of the IT providers we use.

Saas moves the trust boundary out one step further – you now have to also trust your software service vendors – but it doesn’t fundamentally change anything. It’s just another vendor we need to trust.

Fair enough. So let’s chalk one up here to “Cloud is nothing new: we still have to put our faith and trust in someone else.” Got it. However, by again excluding the notion of PaaS and IaaS, Bruce fails to recognize the differences in both responsibility and accountability that these differing models bring; limiting Cloud to SaaS, while simple for cute argument, does not a complete case make:

To what level you are required to and/or feel comfortable transferring responsibility depends upon the provider and the deployment model; the risks associated with an IaaS-based service can be radically different from those of a SaaS vendor’s service. With SaaS, security can be thought of from a monolithic perspective, that of the provider; they are responsible for it. In the case of PaaS and IaaS, these trade-offs become more apparent and you’ll find that this “outsourcing” of responsibility is diminished whilst the mantle of accountability is not. This is pretty important if you want to be generic in your definition of “Cloud.”
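For those who prefer to see that split written down, here is a minimal sketch in Python. The layer names and the cut-over point for each model are illustrative assumptions of mine, not any provider’s actual contract; the only things taken from the argument above are that SaaS leaves security with the provider, that PaaS and IaaS hand progressively more of it back to you, and that accountability never moves.

```python
from enum import Enum


class Model(Enum):
    SAAS = "SaaS"
    PAAS = "PaaS"
    IAAS = "IaaS"


# Hypothetical stack layers, ordered bottom to top (an assumption for illustration).
LAYERS = [
    "facility",
    "hardware",
    "virtualization",
    "operating_system",
    "runtime",
    "application",
    "data",
]

# Hypothetical cut-over: index of the first layer the customer must secure.
CUSTOMER_STARTS_AT = {
    Model.IAAS: LAYERS.index("operating_system"),
    Model.PAAS: LAYERS.index("application"),
    Model.SAAS: len(LAYERS),  # monolithic view: the provider secures it all
}


def responsibility(model: Model) -> dict:
    """Return which layers each party secures under the given model."""
    split = CUSTOMER_STARTS_AT[model]
    return {"provider": LAYERS[:split], "customer": LAYERS[split:]}


def accountable_party(model: Model) -> str:
    """Accountability stays with the customer regardless of the model."""
    return "customer"


if __name__ == "__main__":
    for model in Model:
        parts = responsibility(model)
        print(model.value, "customer secures:", parts["customer"],
              "| accountable:", accountable_party(model))
```

The point of the toy is the asymmetry: the customer’s list of layers grows as you move from SaaS to PaaS to IaaS, while the answer from `accountable_party` never changes.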

Here’s where I see Bruce going off the rails from his “Cloud is nothing new” rant, much in the same way I’d expect he would suggest that virtualization is nothing new, either:

There is one critical difference. When a computer is within your network, you can protect it with other security systems such as firewalls and IDSs. You can build a resilient system that works even if those vendors you have to trust may not be as trustworthy as you like. With any outsourcing model, whether it be cloud computing or something else, you can’t. You have to trust your outsourcer completely. You not only have to trust the outsourcer’s security, but its reliability, its availability, and its business continuity.

You don’t want your critical data to be on some cloud computer that abruptly disappears because its owner goes bankrupt. You don’t want the company you’re using to be sold to your direct competitor. You don’t want the company to cut corners, without warning, because times are tight. Or raise its prices and then refuse to let you have your data back. These things can happen with software vendors, but the results aren’t as drastic.

…

Trust is a concept as old as humanity, and the solutions are the same as they have always been. Be careful who you trust, be careful what you trust them with, and be careful how much you trust them. Outsourcing is the future of computing. Eventually we’ll get this right, but you don’t want to be a casualty along the way.

So I see a huge contradiction. How we secure our data, or allow others to secure it, is very different in Cloud; it *is* something new in its practical application. There are profound operational, business and technical (let alone regulatory, legal, governance, etc.) differences that do pose new challenges. Yes, we should take our best practices related to “outsourcing” that we’ve built over time and apply them to Cloud. However, the collision course of virtualization, converged fabrics and Cloud Computing is pushing the boundaries of all we know.

Per the examples above, our challenges are significant. The tech industry thrives on the ebb and flow of evolutionary punctuated equilibrium; what’s old is always new again, so it’s important to remember a couple of things:

- Harking back (a whopping 60 years) to the “dawn of time” in the IT/Computing industry to make the case that things “aren’t new” is sort of silly and simply proves you’re the tallest and loudest guy in a room full of midgets. Here’s your sign.

- I don’t see any suggestions for how to make this better in all these rants about mainframes, only FUD.

- If “outsourcing is the future of computing” and we are to see both evolutionary and revolutionary disruptive innovation, shouldn’t we do more than simply hope that “…eventually we’ll get this right?”

The past certainly repeats itself, which explains why every 20 years bell-bottoms come back in style…but ignoring the differences in application, however incremental, is a bad idea. In many regards we have not learned from our mistakes or we fail to recognize patterns, but you can’t drive forward by only looking in the rear-view mirror, either.

Regards,

/Hoff
